Google’s Gemini Powers Breakthroughs in Autonomous Robotics

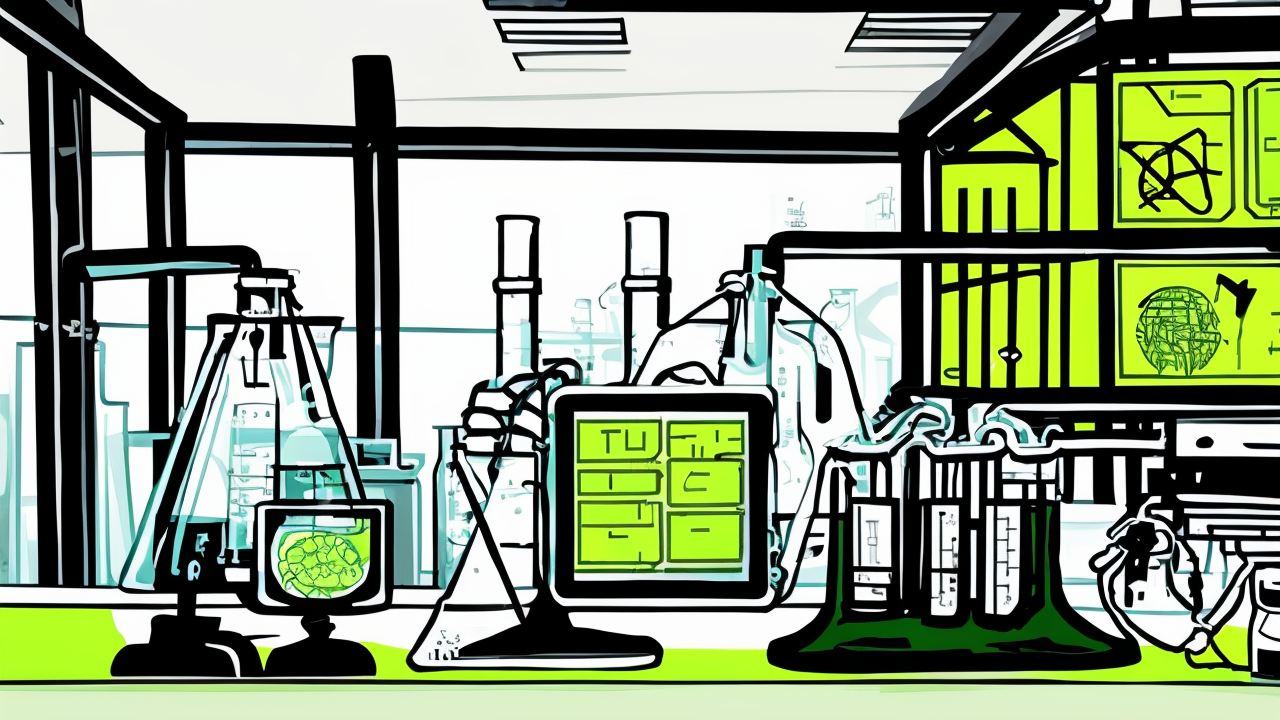

Google DeepMind has unveiled a new on-device Vision-Language-Action (VLA) model for robotics that lets robots operate autonomously without a network connection. The model builds on Gemini Robotics, announced earlier this year, and removes the need for cloud-based processing. Carolina Parada, head of robotics at Google DeepMind, said this approach improves reliability, particularly in challenging environments.

Because it runs locally, the new model lets robots react quickly to commands, addressing a key limitation of the previous hybrid system. That hybrid version paired a local model with a cloud-based system for complex tasks. The standalone VLA model is surprisingly robust, with accuracy comparable to its predecessor. Developers can adapt the model to specific use cases with a small number of demonstrations, typically by performing the task manually so the system can learn from it.

Safety remains a priority, with Parada emphasizing the importance of multi-layered safety protocols. Developers are encouraged to integrate safety measures, including connecting to the Gemini Live API and implementing low-level controllers for critical checks.
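
To illustrate what a low-level critical check might look like, here is a minimal Python sketch. It is not part of Gemini Robotics or the Gemini Live API; the names `SafetyLimits` and `gate_command`, and the limit values, are hypothetical and chosen only for illustration. The idea is that a small, deterministic layer sits between the model's output and the motors and can override any command.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SafetyLimits:
    """Hypothetical hard limits enforced below the VLA model."""
    max_joint_speed: float  # rad/s (illustrative value, not from Google)
    max_force: float        # newtons (illustrative value, not from Google)


def gate_command(joint_speeds: List[float],
                 measured_force: float,
                 limits: SafetyLimits) -> List[float]:
    """Return a version of the commanded joint speeds that respects the limits."""
    # Critical check: if contact force is too high, stop every joint.
    if measured_force > limits.max_force:
        return [0.0] * len(joint_speeds)
    # Otherwise clamp each commanded speed into the allowed range.
    return [
        max(-limits.max_joint_speed, min(limits.max_joint_speed, speed))
        for speed in joint_speeds
    ]


if __name__ == "__main__":
    limits = SafetyLimits(max_joint_speed=1.0, max_force=20.0)
    print(gate_command([0.5, -2.0, 3.0], measured_force=5.0, limits=limits))
    print(gate_command([0.5, -2.0], measured_force=25.0, limits=limits))
```

In a layered setup like the one Parada describes, a check of this kind would run regardless of what the higher-level model decides, so a faulty or unsafe command is still bounded at the hardware level.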

The on-device model is well suited to environments with limited connectivity and to privacy-sensitive settings such as healthcare, where keeping data on the device matters. With Gemini 2.5 on the horizon, Parada predicts further advances in robotics capabilities.

Interested developers can apply to Google’s trusted tester program to explore and refine the technology, which marks a significant step forward in autonomous robotics.

Published: 6/24/2025